In the first releases of AHV, the virtual switch was present on the AHV host but not integrated into the Prism UI. Back then, it was quite obscure what the settings on the host were. Nowadays it is really important to maintain identical settings on all your hosts for the bridge br0 and the bond br0-up.

It is still possible to delete the virtual switch with no impact on production workloads on the cluster. However, if a vs0 is present in the cluster, you should be certain that all hosts (or at least their bridges and bonds) are configured identically. An inconsistent virtual switch can result in degraded nodes, and degraded nodes can be destructive for your Nutanix cluster! Lately I have heard about multiple crashing clusters. Unfortunately, I was the consultant in one of these cases. 🙁

For example, the following configurations are not identical and will result in an inconsistent virtual switch. You will notice more interfaces on the first node than on the second… But the capital T in True on the first node will also cause an inconsistent virtual switch.

================== First node =================

Bridge: br0

Bond: br0-up

bond_mode: balance-tcp

interfaces: eth3 eth2 eth1 eth0

lacp: active

lacp-fallback: True

lacp_speed: slow

================== Second node =================

Bridge: br0

Bond: br0-up

bond_mode: balance-tcp

interfaces: eth3 eth2

lacp: active

lacp-fallback: true

lacp_speed: slow
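A comparison like this is easy to script. The following is a minimal Python sketch, not a Nutanix tool: it assumes you have pasted each node's bond settings as plain `key: value` text, exactly as in the two listings above. The function names `parse_bond_config` and `bond_differences` are made up for this illustration.

```python
def parse_bond_config(text):
    """Parse 'key: value' lines (as in the listings above) into a dict."""
    config = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip blank lines and '=====' separators
        key, value = line.split(":", 1)
        config[key.strip()] = value.strip()
    return config


def bond_differences(first, second):
    """Return {setting: (first_value, second_value)} for every mismatch.

    The comparison is deliberately literal and case-sensitive, so
    'True' versus 'true' is reported. Interface order is compared
    literally as well.
    """
    keys = sorted(set(first) | set(second))
    return {
        key: (first.get(key), second.get(key))
        for key in keys
        if first.get(key) != second.get(key)
    }


FIRST_NODE = """\
Bridge: br0
Bond: br0-up
bond_mode: balance-tcp
interfaces: eth3 eth2 eth1 eth0
lacp: active
lacp-fallback: True
lacp_speed: slow
"""

SECOND_NODE = """\
Bridge: br0
Bond: br0-up
bond_mode: balance-tcp
interfaces: eth3 eth2
lacp: active
lacp-fallback: true
lacp_speed: slow
"""

differences = bond_differences(
    parse_bond_config(FIRST_NODE), parse_bond_config(SECOND_NODE)
)
for setting, (first_value, second_value) in differences.items():
    print(f"{setting}: first node {first_value!r}, second node {second_value!r}")
```

For the two nodes above it reports exactly the two mismatches described: the interfaces list and the lacp-fallback value.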

Disabling the virtual switch before changing bonds!

You need to disable vs0 before you change any bond settings from the CVM. The risk of a degraded node is too high: a degraded node can ruin your cluster, and I have seen data loss after getting the cluster stable again. For those who don’t know how, the steps follow.

Disabling the virtual switch

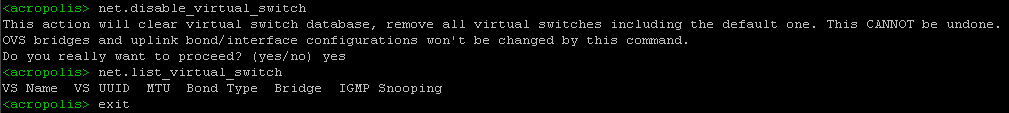

Issue the following commands in the acli to delete the virtual switch.

<acropolis>net.list_virtual_switch

VS Name VS UUID MTU Bond Type Bridge IGMP Snooping

vs0 0b65cb01-5f51-421a-b820-969e058b80ce 1500 kBalanceTcp br0 Disabled

<acropolis>net.disable_virtual_switch

This action will clear virtual switch database, remove all virtual switches including the default one. This CANNOT be undone.

OVS bridges and uplink bond/interface configurations won't be changed by this command.

Do you really want to proceed? (yes/no) yes

<acropolis>net.list_virtual_switch

VS Name VS UUID
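If you want to verify this step in a script rather than by eye, the listing can be checked with a few lines of Python. This is only a sketch working on pasted text: the helper name `virtual_switch_names` is hypothetical, and it assumes that the header line starts with "VS Name" and that switch names contain no spaces, as in the output shown here.

```python
def virtual_switch_names(listing):
    """Return the switch names found in pasted net.list_virtual_switch output."""
    names = []
    for line in listing.splitlines():
        line = line.strip()
        # Skip blank lines, acli prompt lines, and the column header.
        if not line or line.startswith("<acropolis>") or line.startswith("VS Name"):
            continue
        names.append(line.split()[0])  # first column is the switch name
    return names


BEFORE = """\
<acropolis>net.list_virtual_switch
VS Name VS UUID MTU Bond Type Bridge IGMP Snooping
vs0 0b65cb01-5f51-421a-b820-969e058b80ce 1500 kBalanceTcp br0 Disabled
"""

AFTER = """\
<acropolis>net.list_virtual_switch
VS Name VS UUID
"""

print(virtual_switch_names(BEFORE))
print(virtual_switch_names(AFTER))
```

An empty list for the second listing means no virtual switch is left and the bonds can be changed.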

Changing the bond on hosts

Personally, I don’t take risks when changing network settings on a host: I put both the host and the Controller VM into maintenance mode.

Put the host into maintenance mode by issuing the following command.

acli host.enter_maintenance_mode [IP address of the host]

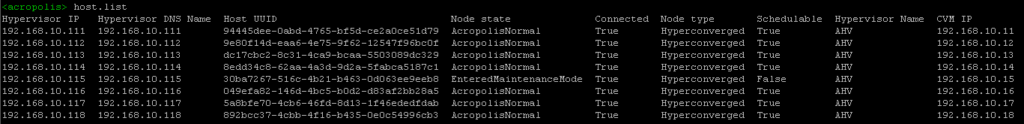

Check the maintenance mode status of the host with ‘acli host.list’. You will see that the host has entered maintenance mode. Virtual machines cannot be scheduled on this host.

Maintenance mode for the CVM is handled by the ncli. Issue the command ‘host edit id=XXXXX enable-maintenance-mode=true’.

Changing the bonds

The preferred method of changing bonds is the ‘Prism way’. As we have already deleted vs0, we don’t have that option anymore. The command line is the only way to change the bond right now. It is NOT best practice to use ovs-vsctl on the AHV host. You should only use the manage_ovs command on the Controller VM.

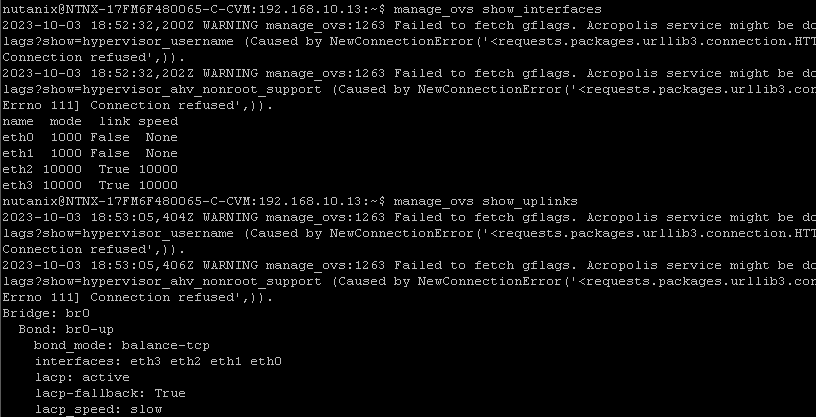

The most useful manage_ovs commands are ‘manage_ovs show_interfaces’ and ‘manage_ovs show_uplinks’. With these commands you get information about the connection status of the physical NICs of the host and the current bond configuration.

As you can see in the screenshot, two unconnected 1 Gb interfaces are in the bond configuration. I want to get rid of them, and I also want to change the capital T in True to lower case in the lacp-fallback setting.

Don’t get confused by the errors. The manage_ovs command needs the acropolis service, and that service is not running because the CVM is in maintenance mode. Now the command

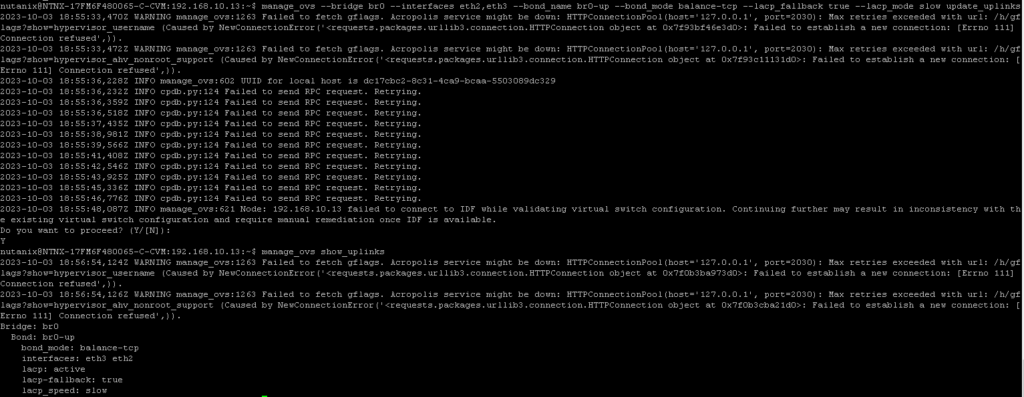

‘manage_ovs --bridge_name br0 --interfaces eth2,eth3 --bond_name br0-up --bond_mode balance-tcp --lacp_fallback true --lacp_mode slow update_uplinks’ is issued and the bond is configured. Ignore the errors again, and don’t forget to confirm with ‘Y’.
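Because the same command has to be repeated on every host, it helps to generate it from one set of values, so that a stray capital letter or a forgotten interface cannot creep in. The following is a minimal Python sketch: the helper `build_update_uplinks_command` is made up for this illustration, the flags are copied from the command above and written with double hyphens as the shell expects, and the script only prints the command, it does not run it.

```python
import shlex


def build_update_uplinks_command(bridge, bond, interfaces, bond_mode,
                                 lacp_fallback, lacp_mode):
    """Assemble the manage_ovs update_uplinks command line as a string."""
    args = [
        "manage_ovs",
        "--bridge_name", bridge,
        "--interfaces", ",".join(interfaces),
        "--bond_name", bond,
        "--bond_mode", bond_mode,
        # Always emit lower case, so 'True' and 'true' cannot get mixed up.
        "--lacp_fallback", "true" if lacp_fallback else "false",
        "--lacp_mode", lacp_mode,
        "update_uplinks",
    ]
    return shlex.join(args)


command = build_update_uplinks_command(
    bridge="br0",
    bond="br0-up",
    interfaces=["eth2", "eth3"],
    bond_mode="balance-tcp",
    lacp_fallback=True,
    lacp_mode="slow",
)
print(command)
```

Run the printed command on the CVM of each host in turn, while that host is in maintenance mode.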

Well, change all the bonds to the settings you want… When you are finished, do a final check of the bond configuration before creating vs0 again. Just enter the command ‘allssh manage_ovs show_uplinks’ to check the bond configuration on all hosts.

Reestablishing the virtual switch

Creating vs0 again is the last step in this procedure… And it is an easy one… Just issue the following command and the virtual switch vs0 is recreated.

acli net.migrate_br_to_virtual_switch br0 vs_name=vs0
